The rover now has fully working tele-operation through wireless keyboard control. The power supply issue was resolved by adding a separate source to power the Raspberry Pi, and the Pi can now also be reached through serial communication on a shared WiFi network.

Below are two video demos highlighting the following:

- Differential drive for all movement – unique steering algorithm controlled by tele-operated function

- Smooth rotation through controlled speed and direction, implemented with modular and abstracted programming principles

- Ease of use through simple user interface
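As a rough illustration of how keyboard tele-operation can map onto a differential drive, here is a minimal sketch. The key bindings (`w`, `a`, `s`, `d`), the `wheel_speeds` function, and the normalized speed range of -1.0 to 1.0 are assumptions made for this example; they are not taken from the rover's actual code.

```python
from typing import Tuple

# Hypothetical key bindings: each key maps to (left, right) wheel directions.
# Equal signs drive straight; opposite signs rotate the rover in place.
KEY_COMMANDS = {
    "w": (1.0, 1.0),    # forward
    "s": (-1.0, -1.0),  # reverse
    "a": (-1.0, 1.0),   # rotate left in place
    "d": (1.0, -1.0),   # rotate right in place
}


def wheel_speeds(key: str, speed: float = 0.5) -> Tuple[float, float]:
    """Return (left, right) wheel speeds in [-1.0, 1.0] for a key press.

    Unknown keys stop both wheels, which is a safe default for tele-operation.
    """
    speed = max(0.0, min(1.0, speed))  # clamp the requested speed
    left, right = KEY_COMMANDS.get(key.lower(), (0.0, 0.0))
    return left * speed, right * speed
```

Keeping the key-to-wheel mapping in one table separates the steering logic from the motor driver, so the same function could feed whatever motor interface the rover uses.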

Computer Vision Updates

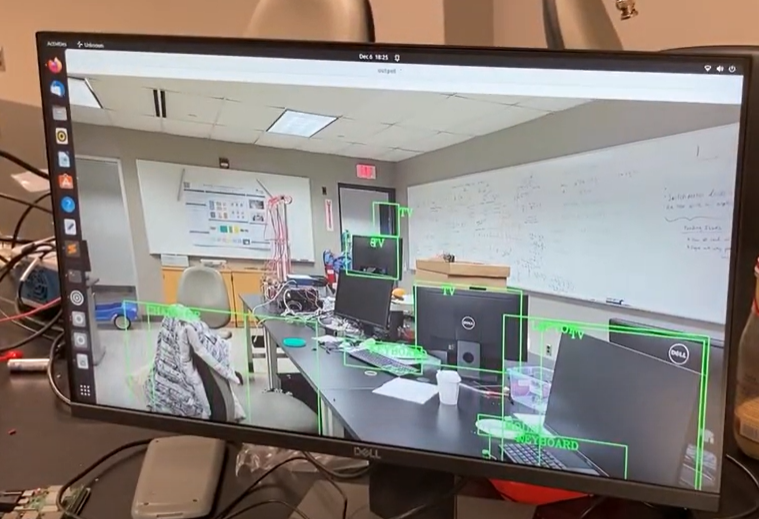

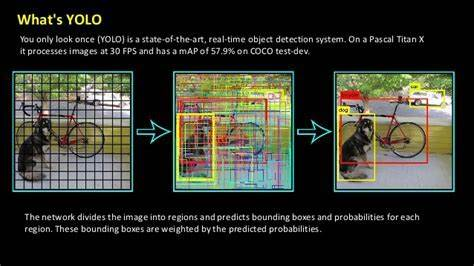

Along with the rover demo, we also showed the rover's computer vision capabilities: classification of up to 80 different object classes from the COCO dataset using an implementation of the YOLO model. The image below is a computer vision screenshot of our rover’s camera view with the object detection Python code running on the Raspberry Pi.

The images below describe how the YOLO model works and what the COCO dataset is.

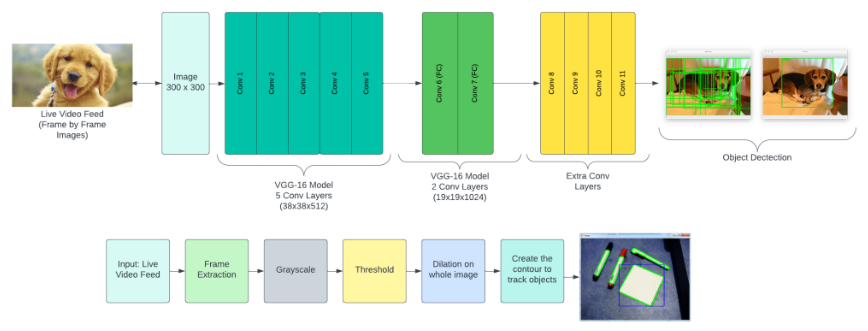
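To make the detection output more concrete, the sketch below shows one way raw detections could be filtered by confidence and turned into readable labels. The `Detection` tuple, the `label_detections` function, and the 0.5 threshold are assumptions for illustration only, and `COCO_EXCERPT` lists just the first three of the 80 class names; the rover's actual detection code may be structured differently.

```python
from typing import Iterable, List, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical detection record: class index, score, and pixel box."""
    class_id: int
    confidence: float
    box: Tuple[int, int, int, int]  # (x, y, width, height)


# Excerpt only: the first three of the 80 COCO class names used by YOLO.
COCO_EXCERPT = {0: "person", 1: "bicycle", 2: "car"}


def label_detections(
    detections: Iterable[Detection], min_confidence: float = 0.5
) -> List[str]:
    """Drop low-confidence detections and format the rest as 'name score'."""
    labels = []
    for det in detections:
        if det.confidence < min_confidence:
            continue  # skip weak detections
        # Fall back to the numeric id for classes missing from the excerpt.
        name = COCO_EXCERPT.get(det.class_id, f"class {det.class_id}")
        labels.append(f"{name} {det.confidence:.2f}")
    return labels
```

Raising `min_confidence` trades missed objects for fewer false positives, which may matter on a small board where every drawn box costs display time.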

The image below shows how the overall system works as a block diagram.