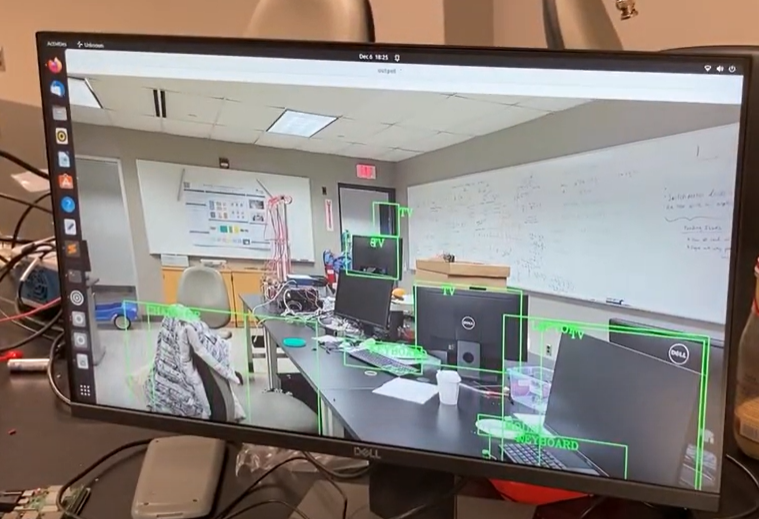

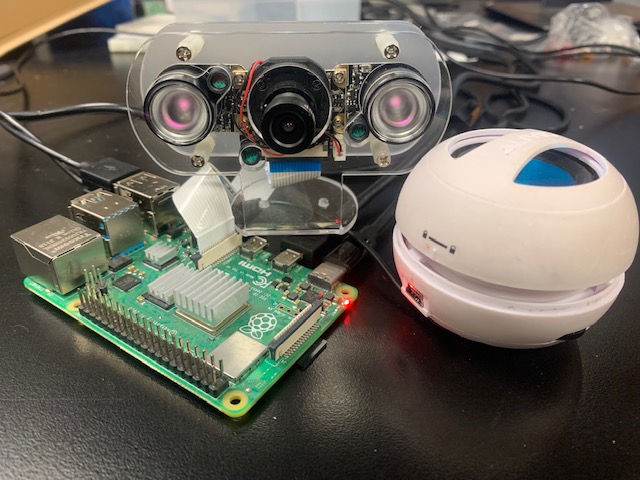

The team successfully integrated the camera, motor, and audio subsystems with the rover drivetrain. Pictures of the subsystems and the initial integrated prototype are shown below.

We used VNC Viewer to establish a wireless remote desktop connection between the Raspberry Pi and an external laptop, which makes it easier to interface with the rover. The preliminary test results of the new teleoperated control using this connection are shown below.

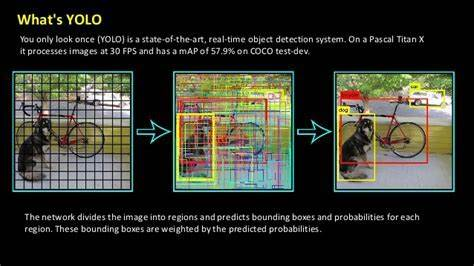

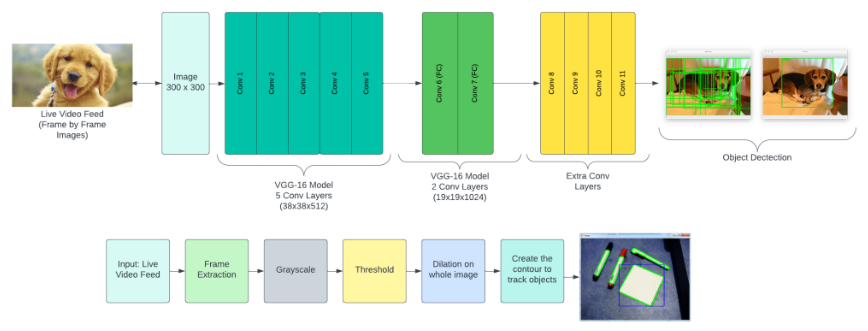

Computer Vision Updates

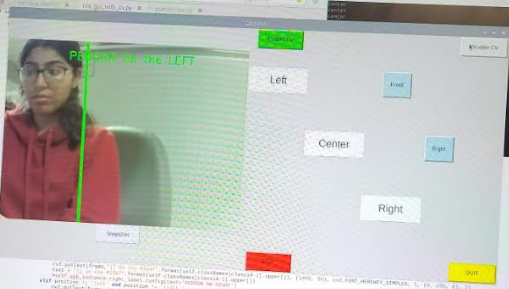

Integrating computer vision with the Arduino motor controller logic has proven successful through the use of the PySerial library. However, due to budget constraints, we were unable to obtain a stereo camera for improved accuracy in locating objects. Nevertheless, our team has focused on creating a semi-autonomous system, which is now operational. The system uses a single camera, but with computer vision integrated into a GUI, it provides users with information about the location and distance of objects (the distance measurement is currently shown only in the terminal). This extra guidance improves the user’s experience and enables smoother operation of the device.
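To illustrate how a single-camera detection could drive the Arduino over PySerial, here is a minimal sketch. It is not our exact implementation. The `Detection` structure, the one-letter command protocol (`L`, `R`, `F`), the port name, the baud rate, and the pinhole-camera distance formula are all assumptions chosen for illustration. The pinhole formula also assumes the real width of the object and the camera focal length in pixels are known in advance.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Bounding box of a detected object in pixels (hypothetical structure)."""
    x: int      # left edge of the box
    width: int  # width of the box


def estimate_distance_m(box_width_px, real_width_m, focal_length_px):
    """Pinhole-camera estimate: distance = real width * focal length / pixel width."""
    if box_width_px <= 0:
        raise ValueError("box width must be positive")
    return real_width_m * focal_length_px / box_width_px


def steering_command(det, frame_width_px, dead_zone=0.1):
    """Map the object's horizontal offset to a one-byte command (assumed protocol)."""
    centre = det.x + det.width / 2
    # Normalised offset from the frame centre, from -1 (far left) to 1 (far right).
    offset = (centre - frame_width_px / 2) / (frame_width_px / 2)
    if offset < -dead_zone:
        return b"L"  # object is to the left: turn left
    if offset > dead_zone:
        return b"R"  # object is to the right: turn right
    return b"F"      # object is roughly centred: drive forward


def send_command(port, command):
    """Write one newline-terminated command to any object with a write() method."""
    port.write(command + b"\n")


def open_arduino(port_name="/dev/ttyACM0", baud=9600):
    """Open the serial link. Port name and baud rate are placeholders."""
    import serial  # PySerial, imported lazily so the helpers work without hardware
    return serial.Serial(port_name, baud, timeout=1)
```

On the rover, `send_command(open_arduino(), steering_command(det, 640))` would forward one command per processed frame, while `estimate_distance_m` would produce the kind of distance value that is printed in the terminal. Accepting any object with a `write()` method keeps the logic testable on a laptop without the Arduino attached.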

The image below shows these features in the GUI.