Robotics is a growing field that has steadily become part of everyday life. A key area of robotics research is the development of humanoid robots. Industries around the world are finding useful applications for robots that look, move, and talk like us. In our project, we aim to contribute to this area by developing a way for humanoid robots to express facial emotions convincingly, using a NAO robot as our platform.

What is a NAO robot?

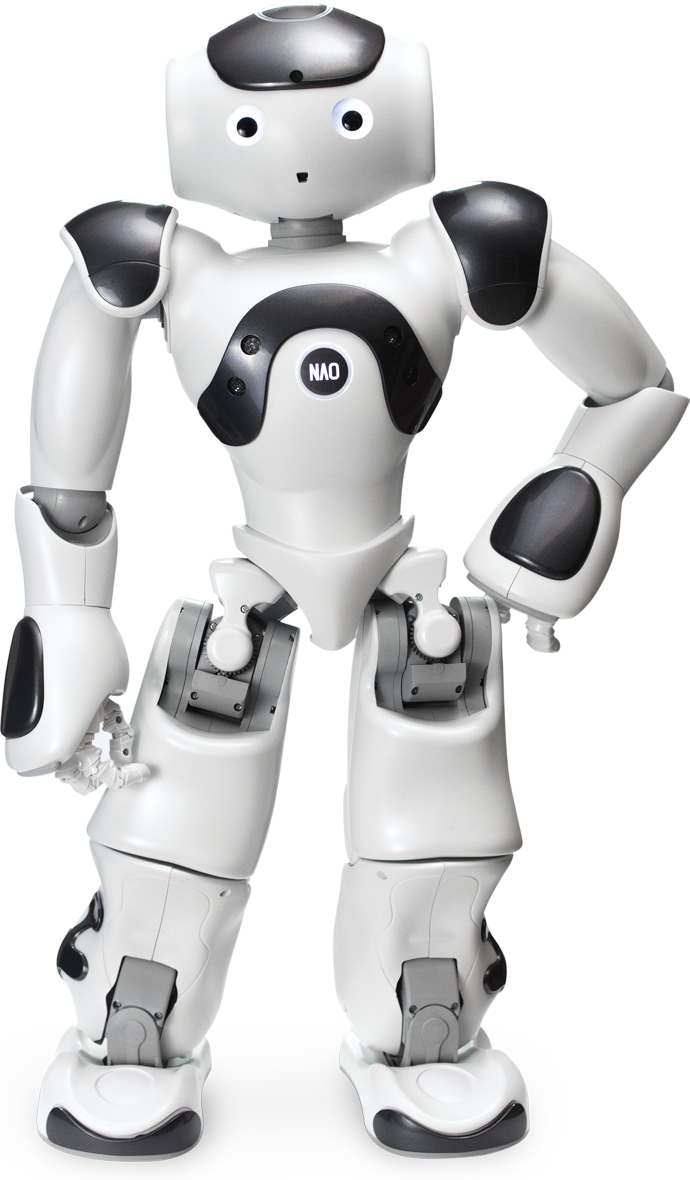

Produced by SoftBank Robotics, NAO is a 58 cm tall programmable humanoid robot with 25 degrees of freedom, giving it a distinctly human-like range of body motion.

Currently, NAO robots are used primarily in education and healthcare. As an educational tool, NAO facilitates learning and research, while in healthcare it serves as a personable way to assist patients and collect data.

NAO’s human proportions and expressive range of body language make it easy and fun for people to interact with. The robot is good at expressing emotion with its body; its face, however, is where it falls short. While the rest of its body is driven by motors, NAO’s face has no moving parts, which prevents it from expressing convincing facial emotions.

Our Goal

The goal of this project is to significantly enhance NAO’s ability to express facial emotion in reaction to external stimuli. We will achieve this by building a device that adds movable eyebrows and lips to NAO’s face. NAO will listen for specific vocal cues, and when it recognizes one, a microcontroller will drive small motors that move the eyebrows and lips to express the corresponding facial emotion.
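To make the cue-to-expression idea concrete, here is a minimal Python sketch of how a recognized phrase might be mapped to motor targets. It is only an illustration: the cue words, the motor names (`left_brow`, `right_brow`, `lips`), and every angle are hypothetical placeholders, not values from the project, and the speech recognition step itself is assumed to have already produced a text string.

```python
# Hypothetical cue words and servo angles (in degrees).
# The project's real cues and motor positions are not specified here.
EXPRESSIONS = {
    "happy": {"left_brow": 100, "right_brow": 80, "lips": 120},
    "sad": {"left_brow": 70, "right_brow": 110, "lips": 60},
    "angry": {"left_brow": 120, "right_brow": 60, "lips": 80},
}

# Resting face used when no cue word is heard (also an assumption).
NEUTRAL = {"left_brow": 90, "right_brow": 90, "lips": 90}


def expression_for(recognized_text):
    """Return motor targets for the first cue word found, else the neutral face."""
    for word in recognized_text.lower().split():
        word = word.strip(".,!?")  # ignore trailing punctuation
        if word in EXPRESSIONS:
            return EXPRESSIONS[word]
    return NEUTRAL
```

Keeping the cues in a lookup table means a new emotion could be added by editing data rather than control logic.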

Project Deliverables

Project deliverables will include both hardware and software.

Hardware deliverables will include:

- Fabricated mechanical facial features for NAO, consisting of eyebrows and lips

- A fabricated “backpack” for NAO to house a microcontroller and motors, both of which will be used to control the mechanical facial features

- A fabricated “headset” to secure the facial features on NAO’s face

Software deliverables will include:

- Python code used to program NAO to recognize speech and communicate with a microcontroller

- Arduino code which will allow a microcontroller to control the motorized facial features in response to communications received from the NAO robot
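
The two software pieces have to agree on a message format. The sketch below shows one possible framing, written in Python for illustration only. The link between NAO and the microcontroller is not specified above, so a text-based serial link is assumed here, and the `<left,right,lips>` layout, the function names, and the 0 to 180 degree range are all hypothetical. `decode_command` mirrors the parsing the microcontroller would have to perform.

```python
def encode_command(left_brow, right_brow, lips):
    """Pack three servo angles into one ASCII line, e.g. b"<100,80,120>\\n"."""
    angles = (left_brow, right_brow, lips)
    for angle in angles:
        # Typical hobby servos accept 0-180 degrees (assumed range).
        if not 0 <= angle <= 180:
            raise ValueError("angle out of range: %r" % (angle,))
    return ("<%d,%d,%d>\n" % angles).encode("ascii")


def decode_command(line):
    """Parse a framed line back into a tuple of three integer angles."""
    text = line.decode("ascii").strip()
    if not (text.startswith("<") and text.endswith(">")):
        raise ValueError("malformed frame: %r" % (text,))
    parts = text[1:-1].split(",")
    if len(parts) != 3:
        raise ValueError("expected 3 angles, got %d" % len(parts))
    return tuple(int(part) for part in parts)
```

The start and end markers let the receiver discard partial lines, which can occur when the microcontroller begins reading mid-message.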